Application Overview

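LLaMA-Factory is a low-code training framework for large models, open-sourced by SeamLessAI (零隙智能). It integrates the fine-tuning methods and optimization techniques most widely used in the industry, and lets developers build domain-specific large models from private data with limited compute. It unifies a range of efficient fine-tuning methods and simplifies the common training approaches: generative pre-training, supervised fine-tuning, reinforcement learning, and direct preference optimization.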

Usage Guide

Software path

/opt/app/LLaMA-Factory

Conda environment

/opt/app/anaconda3/envs/LLaMA-Factory

Usage example

- Request resources

salloc -p <gpu-partition> --gres=gpu:1

- Load the environment

source /opt/app/anaconda3/bin/activate

conda activate LLaMA-Factory

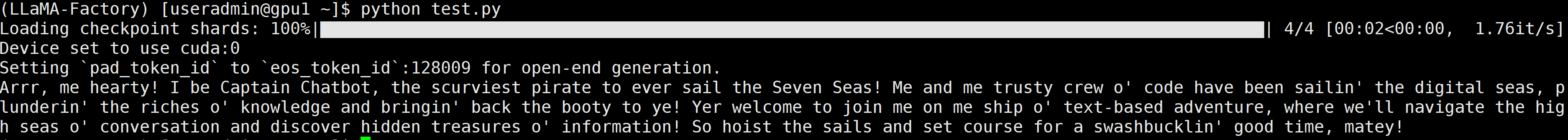
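Before starting a heavy job, it can help to confirm that the allocation and the environment look as expected. The following is a minimal sketch and not part of the original guide: it assumes the session was started through Slurm (so `SLURM_JOB_ID` is set) and that the `LLaMA-Factory` environment provides `torch` and `transformers`. The helper name `check_environment` is hypothetical.

```python
import importlib.util
import os


def check_environment(packages=("torch", "transformers")):
    """Collect basic facts about the current job and Python environment."""
    report = {
        # Set by Slurm inside an allocation; None when run outside a job.
        "slurm_job_id": os.environ.get("SLURM_JOB_ID"),
        # Usually set by Slurm when GPUs are requested via --gres; may be None.
        "cuda_visible_devices": os.environ.get("CUDA_VISIBLE_DEVICES"),
        # Name of the active conda environment, if any.
        "conda_env": os.environ.get("CONDA_DEFAULT_ENV"),
    }
    for name in packages:
        # find_spec returns None when the package cannot be imported.
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

If `conda_env` is not `LLaMA-Factory`, or either package is reported as `False`, the activation step above most likely did not take effect in the current shell.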

- Run inference with the original model (test.py)

import transformers
import torch

model_id = "/opt/app/LLaMA-Factory/models/Meta-Llama-3-8B-Instruct"

pipeline = transformers.pipeline(
    "text-generation",
    model=model_id,
    model_kwargs={"torch_dtype": torch.bfloat16},
    device_map="auto",
)

messages = [
    {"role": "system", "content": "You are a pirate chatbot who always responds in pirate speak!"},
    {"role": "user", "content": "Who are you?"},
]

prompt = pipeline.tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True,
)

terminators = [
    pipeline.tokenizer.eos_token_id,
    pipeline.tokenizer.convert_tokens_to_ids("<|eot_id|>"),
]

outputs = pipeline(
    prompt,
    max_new_tokens=256,
    eos_token_id=terminators,
    do_sample=True,
    temperature=0.6,
    top_p=0.9,
)

print(outputs[0]["generated_text"][len(prompt):])
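To make the prompt handling in test.py more concrete, the sketch below approximates by hand what `apply_chat_template` produces for Llama 3 Instruct, and shows why the script slices the result with `len(prompt)`. It is an illustration only: the authoritative template ships with the model's tokenizer, the function `render_llama3_prompt` is hypothetical, and `fake_output` is a stand-in for a real pipeline result, not actual model output.

```python
def render_llama3_prompt(messages, add_generation_prompt=True):
    """Approximate the Llama 3 Instruct chat format as a plain string."""
    parts = ["<|begin_of_text|>"]
    for message in messages:
        # Each turn is a role header followed by the content and <|eot_id|>.
        parts.append(
            f"<|start_header_id|>{message['role']}<|end_header_id|>\n\n"
            f"{message['content'].strip()}<|eot_id|>"
        )
    if add_generation_prompt:
        # An open assistant header cues the model to write the reply.
        parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


messages = [
    {"role": "system", "content": "You are a pirate chatbot who always responds in pirate speak!"},
    {"role": "user", "content": "Who are you?"},
]
prompt = render_llama3_prompt(messages)

# The text-generation pipeline returns the prompt followed by the completion,
# so slicing off len(prompt) characters leaves only the newly generated text.
fake_output = [{"generated_text": prompt + "Arrr, placeholder reply."}]  # stand-in value
reply = fake_output[0]["generated_text"][len(prompt):]
print(reply)
```

Because each turn in this format ends with `<|eot_id|>`, test.py passes that token's id alongside `eos_token_id` as a terminator, so generation stops at the end of the assistant's reply instead of running on to `max_new_tokens`.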